You don’t need to be Einstein to understand how machines learn—just the right mindset and a fresh way to see the numbers.

Why This Matters

Let’s get real—when most people hear “linear algebra,” they either freeze or flash back to some traumatic chalkboard experience. But here’s the truth: you don’t need a Ph.D. to understand the math behind AI. You just need the right lens.

If you’re a builder, creator, or just AI-curious, understanding basic linear algebra will unlock a whole new layer of confidence and insight. It’s the math that powers:

- Neural networks

- Embeddings

- Recommendations

- Generative AI (like ChatGPT!)

Let’s decode this—without jargon, without fear, and without any dry lectures.

🔢 What Is Linear Algebra, Really?

At its core, linear algebra is the math of vectors, matrices, and the operations that transform them. For AI, that makes it the math for data. Think of it as:

📦 Organizing stuff (data)

➕ Operating on it (transforming, combining, optimizing)

🔁 Repeating that process… really fast

It’s the foundation that allows AI to learn, adapt, and generate new things.

Let’s look at the 5 core building blocks—explained in plain English.

1. Vectors: A Fancy Word for Lists

✅ What it is:

A vector is just a list of numbers. Example:

[1, 2, 3]

Imagine you’re describing a cat with numbers:

- Fluffiness: 1

- Meow volume: 2

- Cuteness: 3

That’s a vector representation of your cat. AI represents images, words, and even your preferences as vectors like this one.
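In code, a vector really is just a list. Here is a minimal Python sketch of the cat vector above; the feature names and the second cat (`other_cat`) are made up for illustration.

```python
# A vector is a list of numbers where each position has a fixed meaning.
# The feature names are illustrative labels for the cat example above.
features = ["fluffiness", "meow_volume", "cuteness"]
cat = [1, 2, 3]

# Print each feature next to its value.
for name, value in zip(features, cat):
    print(f"{name}: {value}")

# Vectors support simple element-wise math, e.g. doubling every feature...
louder_cat = [2 * x for x in cat]                    # [2, 4, 6]

# ...or adding two vectors position by position (hypothetical second cat).
other_cat = [3, 1, 2]
combined = [a + b for a, b in zip(cat, other_cat)]   # [4, 3, 5]
```

The key idea is that position matters: the first number always means fluffiness, so two cats can be compared slot by slot.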

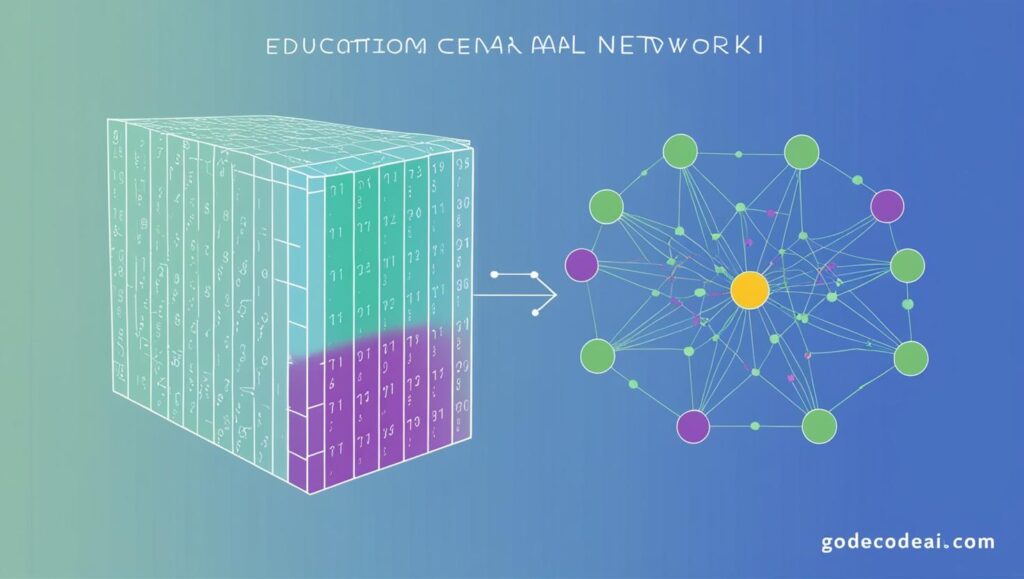

2. Matrices: Grids of Vectors

✅ What it is:

A matrix is a grid of numbers, and each row (or column) can be read as a vector. Think of an Excel sheet.

[1 2 3]

[4 5 6]

[7 8 9]

Here, each row could be one cat, with its fluffiness, meow volume, and cuteness, so the matrix describes 3 cats at once. AI processes matrices like this to find patterns, similarities, and categories.
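A minimal plain-Python sketch of the same grid, assuming each row is one cat and each column is one feature (the variable names are illustrative). Reading a row gives you one cat; reading a column gives you one feature across every cat.

```python
# Each row is one cat: [fluffiness, meow_volume, cuteness].
cats = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

# A row is a single cat's vector.
second_cat = cats[1]                                   # [4, 5, 6]

# A column is one feature measured across all cats.
cuteness = [row[2] for row in cats]                    # [3, 6, 9]

# zip(*cats) walks the columns; averaging each one summarizes the group.
n = len(cats)
feature_means = [sum(col) / n for col in zip(*cats)]  # [4.0, 5.0, 6.0]
```

Summaries like these column averages are about the simplest "pattern" you can pull out of a matrix.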

3. Dot Product: Matching Things

✅ What it is:

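It multiplies two vectors entry by entry and adds up the results. The bigger the number, the more the two vectors point the same way, so it works as a quick similarity score.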

Think:

- Vector A = You

- Vector B = A job ad

- Dot product = How well you match that job

AI uses dot products to:

- Rank results

- Recommend content

- Measure word similarity (like “king” vs “queen”)
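To make the "you vs. a job ad" idea concrete, here is a small sketch. The skill dimensions and scores are hypothetical. It also shows cosine similarity, a common variant that divides the dot product by both vectors' lengths so that long vectors don't win just for being long.

```python
import math

def dot(a, b):
    """Multiply matching entries and add them up."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product divided by both lengths, so the result lands in [-1, 1]."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Hypothetical skill scores: [python, design, statistics]
you = [3, 1, 4]
job_ad = [2, 0, 5]
other_job = [0, 5, 1]

print(dot(you, job_ad))      # 26
print(dot(you, other_job))   # 9
print(cosine_similarity(you, job_ad) > cosine_similarity(you, other_job))  # True
```

By either measure, `job_ad` is the better match: it asks for the skills you score highest in.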

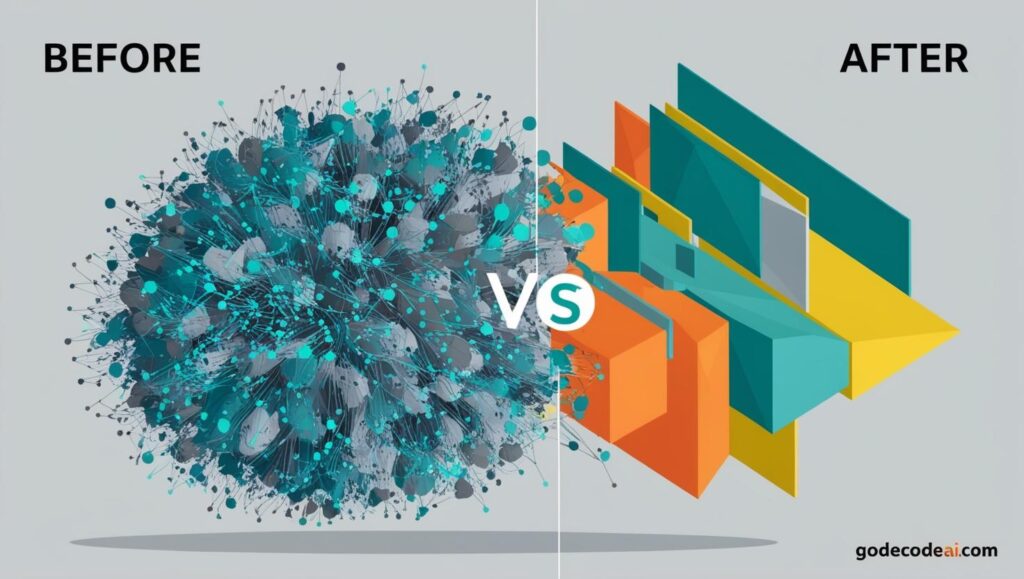

4. Matrix Multiplication: Transformation Time

✅ What it is:

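Multiplying a matrix by a vector takes the dot product of each matrix row with that vector, producing a new, transformed vector. This is how AI changes or combines data.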

In a neural network, each layer typically multiplies your data by a learned weight matrix (usually followed by a simple nonlinear function), learning what to keep, amplify, or forget.

Matrix multiplication is like feeding data through different lenses to find patterns.
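Here is a small plain-Python sketch of matrix multiplication and of one toy neural-network layer. The weights, bias, and helper names (`mat_vec`, `mat_mul`, `dense_layer`) are hypothetical and chosen for illustration. Real networks learn their weights from data and run on optimized libraries rather than Python loops.

```python
def mat_vec(matrix, vector):
    """Each output entry is the dot product of one matrix row with the vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def mat_mul(a, b):
    """Matrix times matrix: apply mat_vec to every column of b."""
    cols = list(zip(*b))
    result_cols = [mat_vec(a, col) for col in cols]
    return [list(row) for row in zip(*result_cols)]

def relu(vector):
    """A common nonlinearity: keep positives, replace negatives with 0."""
    return [max(0.0, v) for v in vector]

def dense_layer(weights, bias, vector):
    """A toy fully connected layer: multiply by weights, add bias, apply ReLU."""
    z = mat_vec(weights, vector)
    return relu([zi + bi for zi, bi in zip(z, bias)])

# Hypothetical weights mapping 3 cat features to 2 new features.
weights = [
    [1.0, 0.0, -1.0],   # fluffiness minus cuteness
    [0.5, 0.5, 0.5],    # half of every feature, added up
]
bias = [0.0, 0.0]
cat = [1, 2, 3]

print(mat_vec(weights, cat))                         # [-2.0, 3.0]
print(dense_layer(weights, bias, cat))               # [0.0, 3.0]
print(mat_mul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))   # [[19, 22], [43, 50]]
```

Notice that `mat_vec` is just the dot product from section 3, applied once per row. The ReLU step is where the layer "forgets": the negative first output is dropped to zero.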

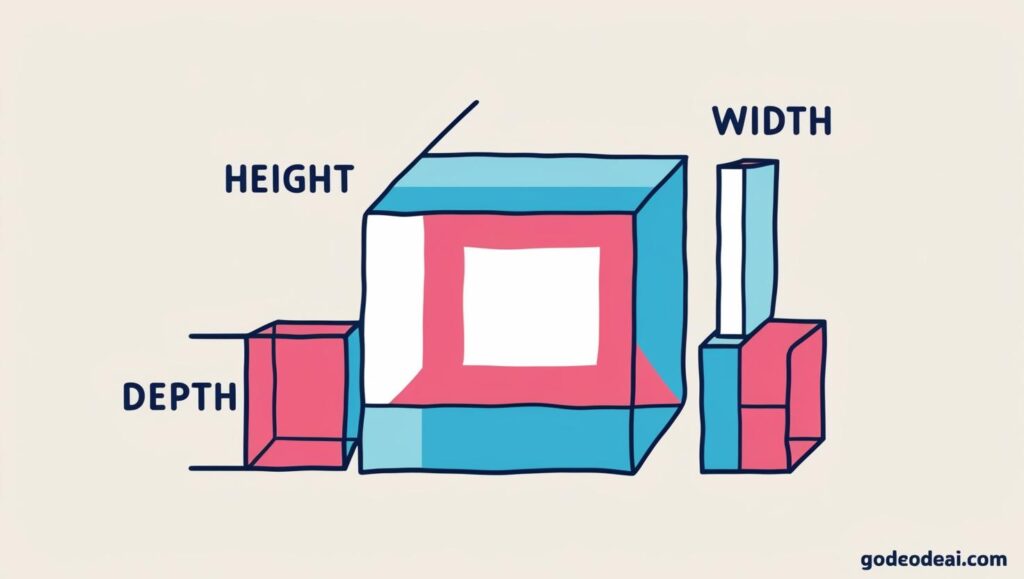

5. Eigenvectors: Finding What Really Matters

✅ What it is:

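An eigenvector of a matrix is a direction that the matrix only stretches or shrinks, without turning it. Applied to the right matrix (like a dataset's covariance matrix), eigenvectors help AI identify the most important features.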

Example:

In a photo, maybe only lighting and edges matter for face detection. Eigenvectors help AI figure out which directions in your data are meaningful, and which are noise.

This is at the heart of dimensionality reduction tools like PCA (Principal Component Analysis).
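PCA's first principal component is the top eigenvector of the data's covariance matrix. Below is a minimal sketch that finds it with power iteration on a tiny, made-up 2D dataset. Power iteration is used here only because it is easy to read. Real PCA implementations typically rely on more robust routines such as SVD, and all names below are illustrative.

```python
import math

def covariance_matrix(points):
    """2x2 sample covariance matrix for a list of (x, y) points."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mean_x) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - mean_y) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points) / (n - 1)
    return [[sxx, sxy], [sxy, syy]]

def mat_vec(m, v):
    """Matrix times vector: one dot product per row."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def top_eigenvector(m, iterations=200):
    """Power iteration: keep multiplying by m and rescaling to length 1.
    The vector settles on the eigenvector with the largest eigenvalue,
    assuming that eigenvalue is strictly the largest and the starting
    vector is not orthogonal to its eigenvector."""
    v = [1.0, 0.0]
    for _ in range(iterations):
        w = mat_vec(m, v)
        length = math.sqrt(sum(x * x for x in w))
        v = [x / length for x in w]
    # For a unit vector v, v . (m v) is the matching eigenvalue.
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(m, v)))
    return v, eigenvalue

# Made-up data that mostly runs along the y = x diagonal.
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
cov = covariance_matrix(points)
direction, strength = top_eigenvector(cov)
print(direction)  # both components close to 0.707: the diagonal direction
```

The eigenvector points along the diagonal, where almost all the variation lives. The small wiggles off the diagonal correspond to a much smaller second eigenvalue, the "noise" direction PCA would drop.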

🚀 So, How Does This All Power AI?

Every AI model—from chatbots to image generators—is basically doing this:

- Turning your input into vectors

- Running matrix operations to transform and compare those vectors

- Finding patterns, making decisions, and learning over time
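The three steps above can be sketched as a toy linear classifier. This is a hypothetical illustration, not how any particular chatbot or image generator works: the features, labels, and "template" weights are all made up, whereas a real model would learn its weights from data (the "learning over time" part).

```python
# Step 1: turn the input into a vector (hypothetical features for one animal).
animal = [4, 1, 5]   # [fluffiness, meow_volume, cuteness]

# Step 2: a matrix operation. Each row is a made-up "template" for one label,
# so each row's dot product with the input gives that label's score.
labels = ["cat", "dog", "rabbit"]
templates = [
    [0.5, 1.0, 0.5],    # cat: meows a lot
    [0.2, -1.0, 0.3],   # dog: rarely meows
    [0.8, -0.5, 0.9],   # rabbit: fluffy, cute, quiet
]
scores = [sum(w * x for w, x in zip(row, animal)) for row in templates]

# Step 3: a decision. Pick the label with the highest score.
best = labels[scores.index(max(scores))]
print(best)  # rabbit
```

Very fluffy, very cute, and barely meowing: the rabbit template lines up best with this input.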

It’s all just math on steroids, and now you know the core tricks.

💡 Real-World Examples

- Netflix uses vector similarity to recommend what you’ll like

- Spotify applies matrix math to discover your next favorite artist

- GPT models use billions of vector calculations to generate text

- AI art tools like Midjourney turn your prompt into vectors that guide the image as it is refined step by step

🎯 Final Take

You don’t need to memorize formulas.

You don’t need to fear the math.

Just understand the flow:

Vectors → Matrices → Operations → Patterns → Intelligence

The math behind AI is less about complexity and more about perspective. And now that you see it, you’re not just using AI.

You’re thinking like it.

❓FAQ (For Actually Quirky Minds)

Q: Do I need to learn actual math notation to use AI?

Nope. Understanding the concepts is 90% of the game.

Q: What if I want to go deeper?

Start with Khan Academy’s linear algebra course or 3Blue1Brown’s “Essence of Linear Algebra” series on YouTube. Pure gold.

Q: Is this the same math used in ChatGPT?

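Yes. Transformers are powered by vector spaces, dot products, and matrices. Their attention mechanism, for example, uses dot products to score how relevant each word is to every other word.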
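For the curious, here is a toy version of scaled dot-product attention, the core operation in the transformer architecture: softmax(Q·Kᵀ / √d)·V. It is a simplified sketch under stated assumptions. The word vectors are made up, and the same vectors serve as queries, keys, and values. Real models instead produce Q, K, and V by multiplying embeddings with learned matrices, and they run many attention heads in parallel.

```python
import math

def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)                          # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Toy scaled dot-product attention over plain lists of vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key with a dot product, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, values))
                        for i in range(len(values[0]))])
    return outputs

# Three made-up 2-dimensional word vectors.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(words, words, words)
```

Each output row is a blend of all the word vectors, weighted toward the words whose dot product with the query is highest.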