AI is supposed to be neutral.

But the truth? It often isn’t.

From chatbots that stereotype users to image generators that “whitewash” the people they depict, AI systems can reinforce racism, sexism, and other biases at scale.

The shocking part?

This bias isn’t always intentional — but it is systemic. And if you’re building or using AI tools, you’re part of the system.

Let’s break down how AI bias happens, what it looks like in the wild, and, most importantly, how we can fix it.

First, What Is AI Bias?

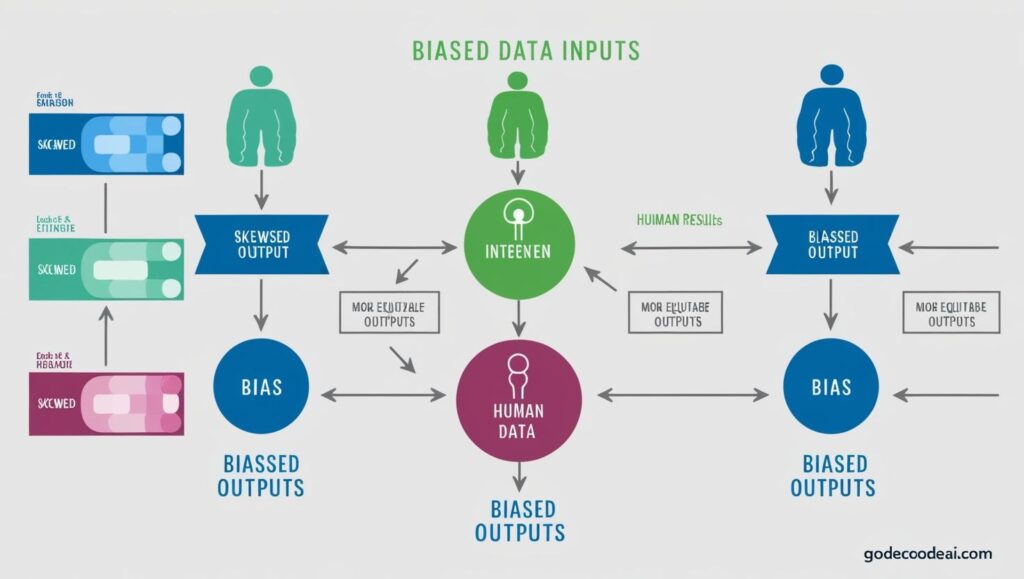

AI bias occurs when an artificial intelligence system produces outputs that unfairly favor or disadvantage certain groups, especially on the basis of race, gender, or class.

It’s not just a glitch. It’s often baked in through:

- Biased training data

- Unbalanced datasets

- Poorly tested models

- Careless prompt design

AI doesn’t have opinions. It has patterns — and it learns those patterns from us.

So if we feed it biased inputs, we get biased outputs. Fast. At scale.

Real Examples of AI Bias (You Shouldn’t Ignore)

- AI image tools lightening skin tones when asked to make headshots look “professional.”

- Facial recognition tools misidentifying Black faces at significantly higher rates than white faces.

- Hiring algorithms filtering out resumes with “ethnic-sounding” names.

- AI art tools defaulting to white characters when prompted with “beautiful” or “hero.”

These aren’t random glitches. They’re predictable outcomes of how these systems were trained, often on data that encodes historical bias.

Why It Happens (Even If You Didn’t Mean It To)

Here’s the uncomfortable truth:

AI reflects the world we give it. And our world has deep historical bias baked into language, images, media, and behavior.

For example:

- Language Models: Trained on internet text — which includes hate speech, stereotypes, and unequal representation.

- Image Models: Learn from datasets where minorities are underrepresented or misrepresented.

- Voice Models: Struggle with accents that are underrepresented in their training data, which often means non-Western ones.

Developers don’t need to be racist for their models to be.

Bias is the default unless you actively fight it.

How to Fix It (No, It’s Not Just Adding More Data)

Fixing AI bias is hard — but not impossible.

Here’s what actually works:

1. Curate Smarter, Not Just Bigger

Don’t just scrape more data. Audit what you already have.

- Who’s overrepresented?

- Who’s invisible?

- What kind of language dominates?
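
Those audit questions can be turned into a quick script. Here is a minimal sketch, assuming each record in your dataset carries a metadata label you can group by. The `dialect` field, the sample records, and the 15% threshold are all made up for illustration; pick whatever fits your data.

```python
from collections import Counter


def representation_report(records, field):
    """Return each group's share of the dataset for one metadata field."""
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: count / total for group, count in counts.items()}


def flag_underrepresented(shares, threshold=0.15):
    """List groups whose share falls below the (arbitrary) threshold."""
    return sorted(group for group, share in shares.items() if share < threshold)


# Hypothetical toy sample: 10 records labeled by dialect.
sample = (
    [{"dialect": "US English"}] * 8
    + [{"dialect": "Indian English"}, {"dialect": "Nigerian English"}]
)

shares = representation_report(sample, "dialect")
print(shares)
print("Underrepresented:", flag_underrepresented(shares))
```

A report like this only answers “who’s overrepresented?” for labels you already have. Groups that were never labeled stay invisible, so it is a starting point for the audit, not the whole audit.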

2. Human-in-the-Loop Review

You need a diverse group of people reviewing outputs, and you need them doing it continuously.

Bias isn’t always obvious in code. It shows up in results.

3. Bias Testing as a Feature, Not a Phase

You test for latency. You test for uptime.

Test for fairness just as aggressively — with real-world scenarios.
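
One way to make fairness testable is to compute a simple metric per group and fail the check when the gap gets too wide. The sketch below uses the demographic parity difference (the gap between the highest and lowest selection rates across groups). It is one fairness metric among many, and the function names, the sample decisions, and the 0.2 limit are assumptions for illustration, not a standard.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(bool(was_selected))
    return {group: selected[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


def check_fairness(decisions, max_gap=0.2):
    """Return (passed, rates, gap). max_gap is an arbitrary example limit."""
    rates = selection_rates(decisions)
    gap = parity_gap(rates)
    return gap <= max_gap, rates, gap


# Hypothetical output of a resume screener: (group, was_shortlisted).
decisions = (
    [("group_a", True)] * 4 + [("group_a", False)] * 1
    + [("group_b", True)] * 2 + [("group_b", False)] * 3
)

passed, rates, gap = check_fairness(decisions)
print(rates, "gap:", round(gap, 2), "passed:", passed)
```

Wired into a test suite, a failing `check_fairness` blocks a release the same way a failed latency check would.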

4. Allow User Overrides

Give users transparency. Let them control tone, representation, even cultural defaults — especially in tools like avatars, summaries, and chatbots.

5. Publish Your Model Cards Honestly

Don’t pretend your model is perfect. Say what it can and can’t do — and who it was built for.
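
A model card doesn’t need special tooling. As a rough sketch, it can start as a small structured record that ships with the model. The field names below are assumptions chosen to match the questions above (what it can do, what it can’t, who it was built for), not an official schema, and the example model is invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    name: str
    intended_users: str
    intended_uses: list
    out_of_scope_uses: list
    known_limitations: list
    evaluated_groups: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card so it can be published alongside the model."""
        return json.dumps(asdict(self), indent=2)

    def missing_sections(self):
        """Names of sections left empty, i.e. things not yet disclosed."""
        return [key for key, value in asdict(self).items() if not value]


# Hypothetical card for an imaginary model.
card = ModelCard(
    name="support-chat-v1",
    intended_users="English-speaking customer support teams",
    intended_uses=["drafting replies to billing questions"],
    out_of_scope_uses=["medical or legal advice"],
    known_limitations=["not evaluated on non-English input"],
)

print(card.to_json())
print("Still undocumented:", card.missing_sections())
```

An empty `evaluated_groups` list is itself useful information: it tells readers the model has not been tested across demographics yet.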

That’s real AI ethics.

What This Means for You (Whether You’re a Dev or Just Using AI)

If you’re building AI tools:

- Test them across different demographics.

- Include diverse voices in your team — especially from groups affected by AI bias.

- Treat fairness like a product feature, not a PR checkbox.

If you’re just using them:

- Don’t blindly trust results.

- Call out bias.

- Choose tools that take ethics seriously — not just performance.

The future of AI is not just faster. It has to be fairer.

FAQs

Q: Is AI bias always intentional?

A: No. In fact, most AI bias is unintentional — it’s the side effect of biased data or careless design.

Q: Are open-source AI models less biased?

A: Not necessarily. Bias depends on the training data and the oversight behind a model, whether it is open or closed. Transparency helps, but it isn’t a fix on its own.

Q: Can you fully eliminate AI bias?

A: No, but you can reduce it substantially through audits, diverse testing, and better data practices.

Q: Is it racist to say an AI chatbot is racist?

A: No. It’s accurate if the outputs reinforce harmful stereotypes. Calling it out is the first step toward building better tech.

Q: How do I test AI for bias myself?

A: Use diverse prompts. Keep the request the same and vary one detail at a time: race, gender, accent, or context. Then compare how the AI responds to each version. Patterns will emerge.
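
If you’re comfortable with a little code, the same idea can be scripted. This is a minimal sketch, assuming you have some function that sends a prompt to your AI tool and returns its reply. Here `ask_model` is a hypothetical placeholder you would supply yourself, and `echo_model` is only a stand-in so the example runs without any real service.

```python
from itertools import product


def build_prompt_variants(template, attributes):
    """Fill the template with every combination of the attribute values."""
    keys = list(attributes)
    variants = []
    for combo in product(*(attributes[key] for key in keys)):
        values = dict(zip(keys, combo))
        variants.append((values, template.format(**values)))
    return variants


def compare_responses(variants, ask_model):
    """Collect the model's reply for each variant, ready for side-by-side review."""
    return [
        {"values": values, "prompt": prompt, "response": ask_model(prompt)}
        for values, prompt in variants
    ]


def echo_model(prompt):
    """Stand-in for a real model call; replace with your own function."""
    return f"(model output for: {prompt})"


variants = build_prompt_variants(
    "Write a short reference letter for {name}, a {role}.",
    {"name": ["Emily", "Lakisha"], "role": ["nurse", "engineer"]},
)

for row in compare_responses(variants, echo_model):
    print(row["values"], "->", row["response"])
```

The script only lines the responses up. Judging whether the tone, length, or content differs unfairly between versions is still a job for a human reader.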

Final Thought

AI bias isn’t just a tech issue.

It’s a mirror — and we don’t always like what we see.

But we get to choose:

Build smarter mirrors. Or keep repeating the same patterns.

The next generation of AI can be better.

But only if we build it that way — on purpose.